Week of June 7th

New updates: Knowledge AI, Conversation Builder, Conversation Assist, Conversational Cloud Infrastructure

Features

Rich answers in the On-Demand Recommendations widget are now editable

Rich answers include images and links to content, audio, and video. Rich answers are more engaging than plain answers, so for many brands they’re an important part of a Conversational AI solution.

In this release, we’re pleased to announce that your agents can now edit the text in rich answer recommendations that are offered in the On-Demand Recommendations widget.

If you’re using answer recommendations that are enriched via Generative AI, be aware that answers that are both rich (i.e., have multimedia) and enriched (i.e., are generated via Generative AI) are supported but not recommended. The rich content always comes from the highest-scoring article, and the generated answer might not align well with it in substance.

Features

Our LLM Gateway now offers URL post-processing

In Messaging contexts, clickable URLs are handy and support an optimal experience for the end user. However, responses from the Large Language Model (LLM) service, which are formed via Generative AI, are always returned as plain text.

That’s where our new URL post-processing service in the LLM Gateway comes in. It takes all the URLs in the response and wraps them in HTML tags, so they become active, clickable URLs.
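To illustrate the idea, here is a minimal sketch of URL post-processing in Python. It is not the LLM Gateway's implementation: the regular expression, the `linkify` function name, and the choice of a plain `<a href>` tag are all assumptions made for the example.

```python
import html
import re

# Assumed pattern: an http(s) URL runs until whitespace, a quote, or an angle bracket.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")


def linkify(text: str) -> str:
    """Wrap every URL found in plain text in an HTML anchor tag."""

    def wrap(match: re.Match) -> str:
        url = match.group(0)
        # Keep trailing sentence punctuation outside the link.
        trimmed = url.rstrip(".,;:!?)")
        trailing = url[len(trimmed):]
        # Escape characters such as & so the URL is safe inside HTML.
        safe = html.escape(trimmed, quote=True)
        return f'<a href="{safe}">{safe}</a>{trailing}'

    return URL_PATTERN.sub(wrap, text)


print(linkify("See https://example.com/help."))
```

Text without URLs passes through unchanged, so a step like this can run on every response.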

By default, the URL post-processing service is on for KnowledgeAI for all accounts. To turn it off, contact your LivePerson representative.

Enhancements

More flexibility when using answers enriched via Generative AI

Generally speaking, within a bot, you might want to use enriched answers in some interactions but not others. Previously, this wasn’t possible because the setting to turn on enriched answers was at the knowledge base level (in Settings). If you turned it on, any bot that used the knowledge base used enriched answers.

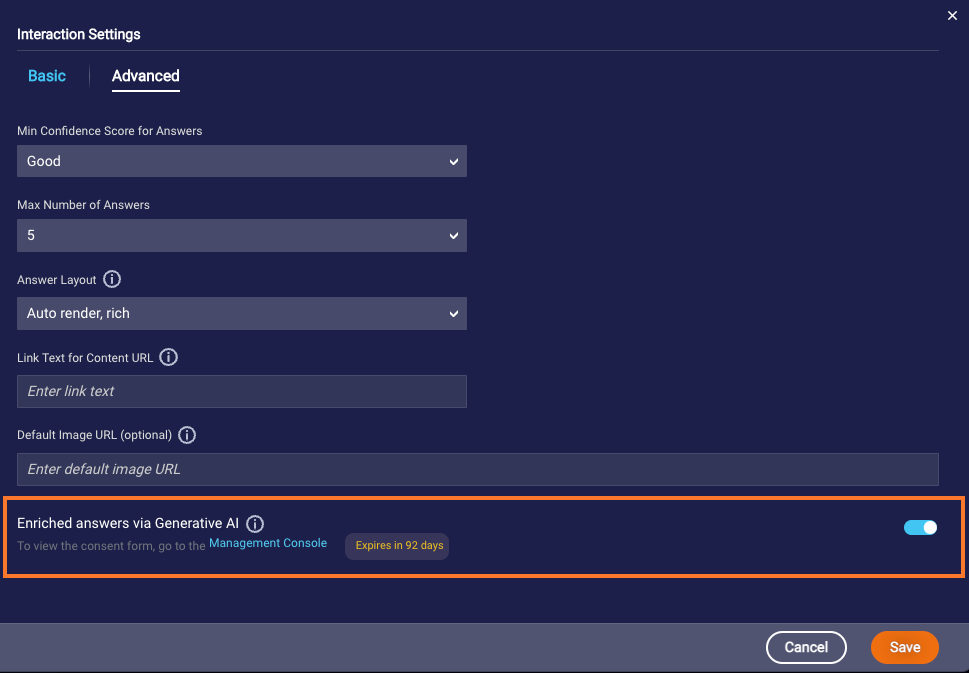

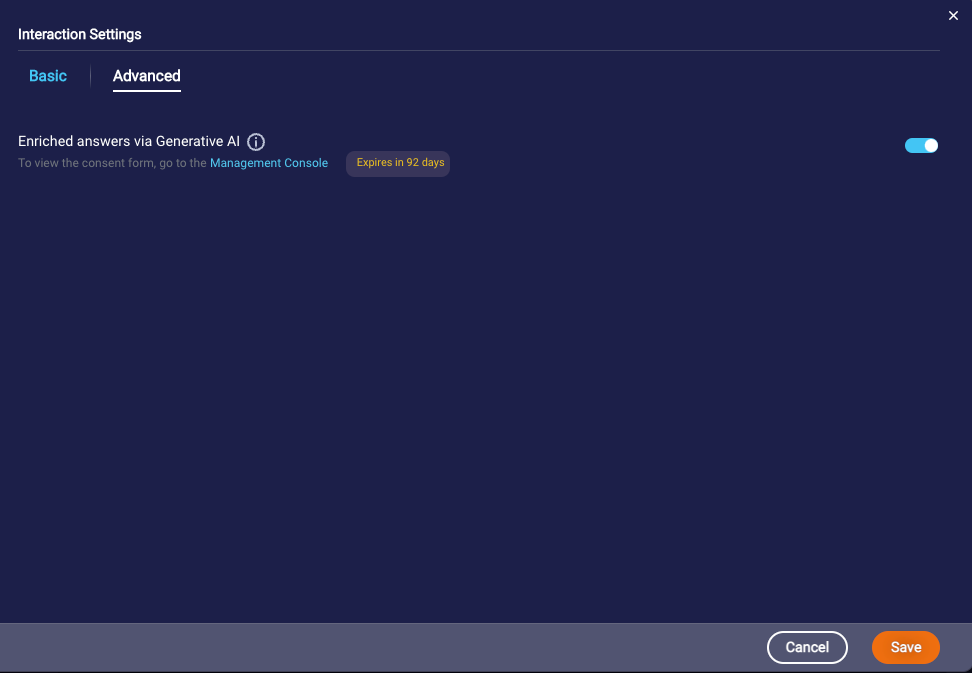

In this release, we’ve moved the Enriched answers via Generative AI setting to the interaction level. This gives you greater flexibility. Within a bot, you can turn it on for some interactions but not others. Or, you can turn it on for every applicable interaction. The choice is now yours.

Where’s the new interaction-level setting? In the KnowledgeAI interaction, you’ll find it on the Advanced tab in the interaction’s settings. (Typically, you use the KnowledgeAI interaction in Messaging bots.)

In the Integration interaction, the display of the setting is dynamic. You’ll find it on the Advanced tab in the interaction’s settings once you select a KnowledgeAI integration in the interaction. (Typically, you use the Integration interaction in Voice bots.)

An enhanced Preview experience when using answers enriched via Generative AI

Conversation Builder’s Preview tool plays an important role as you design, develop, and test a bot. We’ve just updated it so that now, when automating answers enriched via Generative AI, the tool passes some conversation context to the LLM service that does the answer enrichment. The result? You’ll see better enriched answers while you’re previewing the bot. And you’ll have a consistent experience when using:

- Preview

- Conversation Tester

- A deployed bot

With the change to Preview in this release, all of the above now pass conversation context to the LLM service.

Keep in mind that, semantically speaking, you should always get the same answer to the same question when the context is the same. But don’t expect the exact wording to be the same every time. That’s the nature of Generative AI at work creating unique content.

Also keep in mind that when using KnowledgeAI’s Answer Tester, no conversation context is involved, so its results might differ slightly.

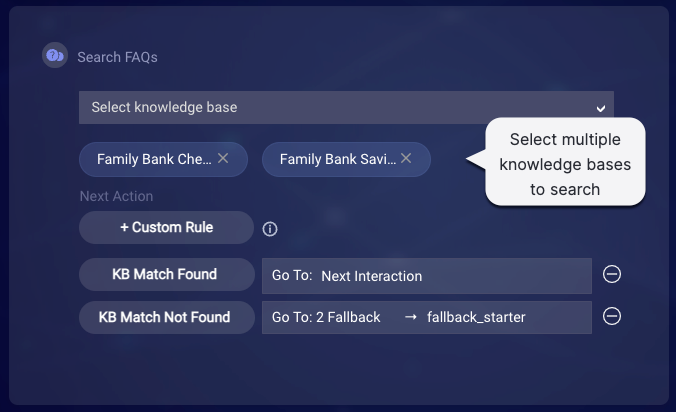

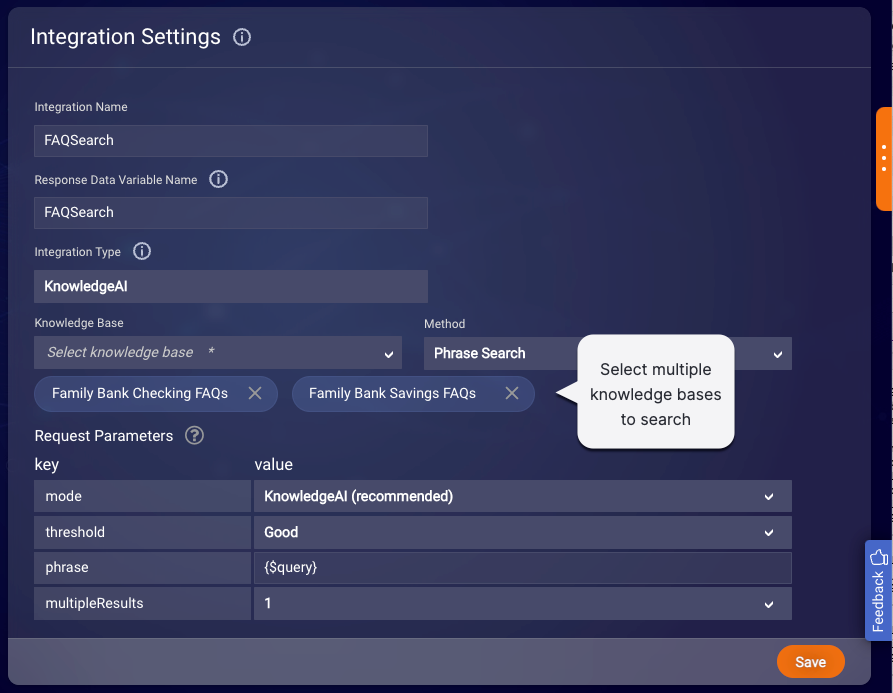

Search multiple knowledge bases in a single interaction

Want more flexibility when automating answers in a bot? You can now search multiple knowledge bases in a single interaction.

KnowledgeAI interaction that specifies multiple knowledge bases

Integration of type “KnowledgeAI” that specifies multiple knowledge bases

Limitations

- You can select a max of 5 knowledge bases.

- The first knowledge base that you select filters the list of remaining ones you can choose from:

    - All of the knowledge bases must belong to the same language group. For example, UK English and US English are fine together. Behind the scenes, the system validates the first two characters of the language code.

    - You can select a mix of internal knowledge bases and external knowledge bases that use LivePerson AI. But you can’t mix external knowledge bases that don’t use LivePerson AI with any other type.
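The limitations above can be sketched as a small validation routine. This is an illustration only: the `KnowledgeBase` class, the `kind` labels, and the `validate_selection` function are hypothetical names, not part of the product. The language-group check compares the first two characters of the language code, as described above.

```python
from dataclasses import dataclass

MAX_KNOWLEDGE_BASES = 5


@dataclass(frozen=True)
class KnowledgeBase:
    name: str
    language_code: str  # e.g. "en-US" or "en-GB"
    # Hypothetical labels: "internal", "external_lp_ai", "external_no_lp_ai"
    kind: str


def language_group(code: str) -> str:
    """The language group is taken from the first two characters of the code."""
    return code[:2].lower()


def validate_selection(kbs: list[KnowledgeBase]) -> list[str]:
    """Return a list of rule violations; an empty list means the selection is valid."""
    errors = []
    if len(kbs) > MAX_KNOWLEDGE_BASES:
        errors.append(f"Select at most {MAX_KNOWLEDGE_BASES} knowledge bases.")
    if len({language_group(kb.language_code) for kb in kbs}) > 1:
        errors.append("All knowledge bases must share a language group.")
    kinds = {kb.kind for kb in kbs}
    if "external_no_lp_ai" in kinds and len(kinds) > 1:
        errors.append("External knowledge bases without LivePerson AI can't be mixed with other types.")
    return errors


uk = KnowledgeBase("UK FAQs", "en-GB", "internal")
us = KnowledgeBase("US FAQs", "en-US", "external_lp_ai")
print(validate_selection([uk, us]))  # UK and US English share the "en" group
```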

How the search works

Searching multiple knowledge bases works like searching a single one:

1 - Find the article matches in the knowledge bases.

2 - Aggregate the results, and keep only those in one of the following groups (listed in order of priority): exact match, intent match, AI Search match, fallback text match.

3 - Sort the results in the group by confidence score.

4 - Return the top results based on the number of answers requested.
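The four steps above can be sketched in Python as follows. The `Match` class, the match-type labels, and `top_answers` are hypothetical names. The sketch also assumes that "keep only those in one of the following groups" means keeping the highest-priority group that has at least one match; the release notes don't spell this out.

```python
from dataclasses import dataclass

# Match types in priority order (labels are assumptions for this sketch).
MATCH_PRIORITY = ["exact", "intent", "ai_search", "fallback_text"]


@dataclass(frozen=True)
class Match:
    article: str
    knowledge_base: str
    match_type: str
    score: float  # confidence score


def top_answers(results_per_kb: list[list[Match]], num_answers: int) -> list[Match]:
    # Steps 1-2: aggregate the matches found in each knowledge base.
    all_matches = [m for results in results_per_kb for m in results]
    for match_type in MATCH_PRIORITY:
        group = [m for m in all_matches if m.match_type == match_type]
        if group:
            # Step 3: sort the surviving group by confidence score, highest first.
            group.sort(key=lambda m: m.score, reverse=True)
            # Step 4: return only as many answers as were requested.
            return group[:num_answers]
    return []


kb1 = [Match("Returns policy", "kb1", "ai_search", 0.9),
       Match("Refund timing", "kb1", "intent", 0.6)]
kb2 = [Match("Refund methods", "kb2", "intent", 0.8)]
for match in top_answers([kb1, kb2], num_answers=2):
    print(match.article, match.score)
```

Under this assumption, the AI Search match is dropped even though it has the highest score, because intent matches take priority.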

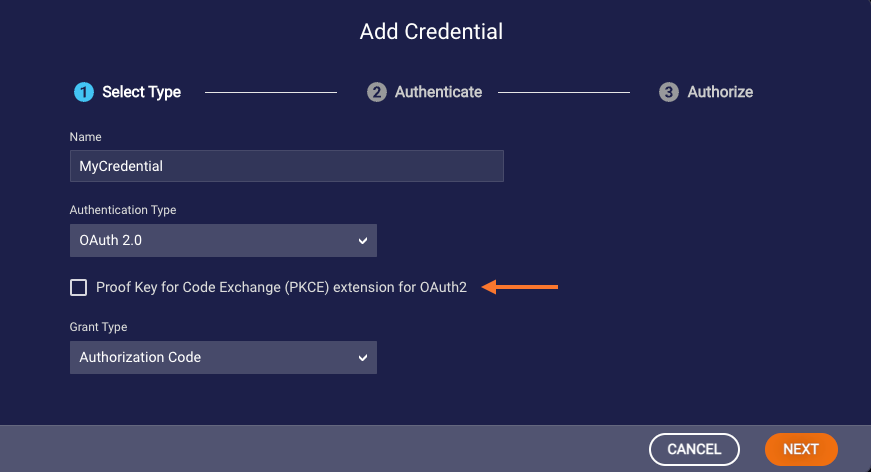

OAuth 2.0 credentials now support PKCE

When creating an OAuth 2.0 credential using the Authorization Code grant type, you can now extend the authorization code grant flow using Proof Key for Code Exchange (PKCE).

PKCE provides additional security by requiring a code challenge and a code verifier in the authorization process. Together, these help protect against cross-site request forgery (CSRF) and authorization code injection attacks.
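For readers unfamiliar with PKCE, here is a minimal sketch of how a client might generate the code verifier and code challenge. It assumes the S256 challenge method from RFC 7636; the release notes don't state which method the credential uses, and the function names are illustrative.

```python
import base64
import hashlib
import secrets


def make_code_verifier(num_bytes: int = 32) -> str:
    """Create a random, URL-safe code verifier (32 bytes encode to 43 characters)."""
    raw = secrets.token_bytes(num_bytes)
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def make_code_challenge(verifier: str) -> str:
    """Derive the S256 challenge: base64url(SHA-256(verifier)) without padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


verifier = make_code_verifier()
challenge = make_code_challenge(verifier)
# The challenge goes in the authorization request; the verifier is sent
# later, with the token request, so the server can check that they match.
print(len(verifier), len(challenge))
```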

Fixes

Voice bots now automatically disregard consumer interruption functionality that applies to Messaging bots

Voice conversations are different from messaging ones, so Voice bots must handle consumer interruptions differently than Messaging bots. Previously, you had to manually disable the functionality that applies to Messaging bots so that the functionality that applies to Voice bots could be used. You did this by adding the system_handleIntermediateUserMessage environment variable to a bot environment, setting the variable to false, and linking the environment to your bot.

As of this release, we’ve fixed things so that this manual step is no longer needed. Voice bots now automatically disregard the consumer interruption functionality that’s meant for Messaging bots. Instead, Voice bots use their own consumer interruption handling functionality by default.

Features

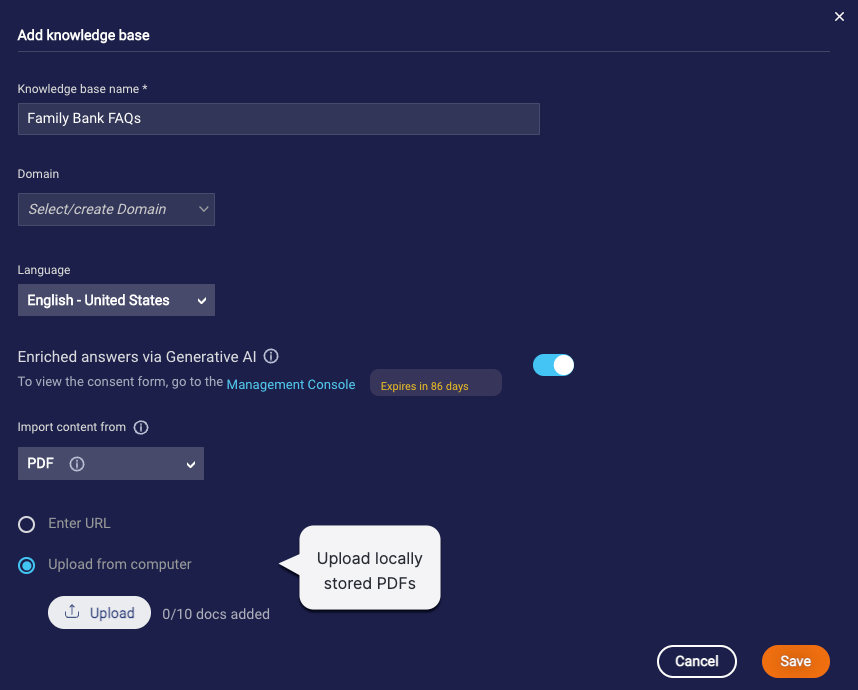

Populate a knowledge base by uploading a PDF from your computer

You can now populate a knowledge base with the content of a PDF that’s stored on your computer. (Previously, you had to specify the URL of a PDF in a publicly accessible location: Google Drive, a website, etc.)

Features

Enhanced Navigation Bar

After considering user feedback, LivePerson has addressed some key concerns:

- Streamlining Access to Frequently Used Applications: Reducing the number of clicks required to access commonly used applications.

- Mitigating Accidental Hovering in the Navbar: Preventing unintended menu expansion caused by accidental hovering.

Updates to the navigation menu will take place in Feb 2024.

Which enhancements affect the user experience?

Pin frequently used applications

The Agent Workspace and Manager Workspace were identified as the most frequently used applications, so they are now pinned above all sections.

Note: These applications will no longer be available through their original category (Engage).

Labeled sections and applications

All categories and the pinned applications mentioned earlier will now be labeled, eliminating the need for users to remember which symbols correspond to them.

Menu behavioral change

The navbar won't expand when hovered over; instead, it will expand only when:

- One of the categories is clicked.

- The hamburger icon is clicked.

Menu structural change

The order of the navbar categories has changed. It is now: Engage, Automate, Optimize, Manage.

The full details about the upcoming change can be found here.

Features

Automatic Encryption Key Rotation

LivePerson customers can enable data-at-rest encryption of conversation transcripts and other engagement attributes. Enabling encryption ensures that data is stored encrypted across the Conversational Cloud datastores and protects the data from potential unauthorised access.

A new Automatic Encryption Key Rotation feature rotates the encryption key at the interval configured by your LivePerson account team.