Week of October 11th

New updates: Conversation Builder, KnowledgeAI and Conversational Cloud Infrastructure & Medallia Survey Bot

Enhancements

Answers enriched via Generative AI - Spanish is officially supported

¡Ya está aquí! After extensive testing by our data scientists, we’re pleased to announce that enriched answers are now officially supported in cases where the consumer query is in Spanish and the knowledge base’s language (content) is also Spanish. Previously, support for Spanish was just experimental.

Increase your reach and take advantage of Generative AI.

External knowledge bases with LivePerson AI - Better-than-ever performance

External knowledge bases that use LivePerson AI can now optionally store the article’s summary and/or detail within KnowledgeAI. This significantly enhances the performance of our AI Search when retrieving articles that match a consumer’s query. With the article’s summary and/or detail available—not just the article’s title—the effectiveness of the semantic search is greatly improved. That’s exciting news.

This change makes two others possible.

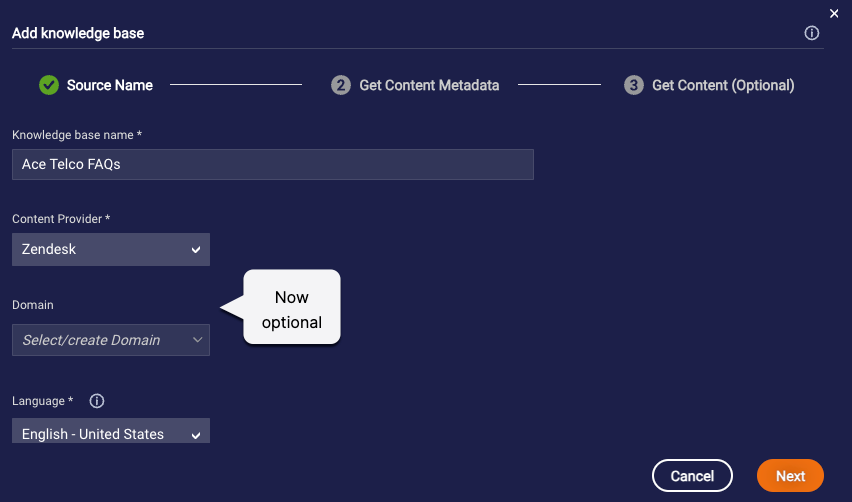

First, associating an intent domain is now optional. You don’t have to tie the articles to intents anymore.

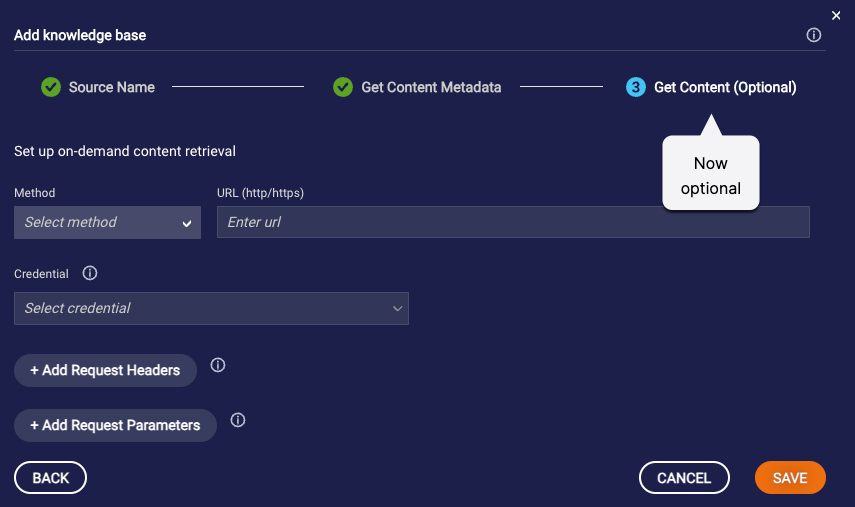

Second, you can now use the content that’s stored within KnowledgeAI to serve answers at runtime. This means that you no longer have to add the Get Content connector (step 7) during setup. It’s now an optional configuration step.

When should you add the Get Content connector? Add it if you always want to rely on and use the content in your CMS when serving answers at runtime.

If you don't add the Get Content connector:

- Make sure to map the summary and/or detail fields when you add the Get Content Metadata connector. If you don't, searches of the knowledge base won't return any results.

- Make sure to manually sync with your CMS on a regular basis and after significant updates. This ensures the content stored in KnowledgeAI is up-to-date.

Can you add both connectors? Yes, you can. We recommend this, as it's a "best of both" approach.

Internal knowledge bases - Maximum article limit is now 5,000

Looking to create larger knowledge bases? Now you can, as we’ve increased the maximum article limit from 1,000 to 5,000.

Internal knowledge bases - Import more than 10 URLs or PDFs into a single knowledge base

It’s still the case that you can import only 10 URLs or PDFs into a knowledge base at one time. What’s changed is the total number of URLs or PDFs that you can import: this is now unlimited. However, once the knowledge base reaches the maximum limit of 5,000 articles, you can no longer import content.

In summary, import in batches of up to 10 until you reach the article limit.
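The batching rule above can be sketched in a few lines of Python. This is only an illustration of the two limits stated in these notes (10 sources per import, 5,000 articles per knowledge base); the function names and example URLs are hypothetical and are not part of any KnowledgeAI API.

```python
from typing import Iterator, List

BATCH_SIZE = 10        # per-import limit stated in these notes
ARTICLE_LIMIT = 5_000  # per-knowledge-base article limit stated in these notes


def plan_import_batches(sources: List[str], batch_size: int = BATCH_SIZE) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `batch_size` URLs or PDFs."""
    for start in range(0, len(sources), batch_size):
        yield sources[start:start + batch_size]


def can_import(current_article_count: int, limit: int = ARTICLE_LIMIT) -> bool:
    """Return True while the knowledge base is still below the article limit."""
    return current_article_count < limit


# Hypothetical example: 25 URLs are split into three imports.
urls = [f"https://example.com/help/page-{i}" for i in range(1, 26)]
batches = list(plan_import_batches(urls))
print([len(batch) for batch in batches])  # [10, 10, 5]
```

Before starting each batch, check the current article count, since a single URL or PDF can produce more than one article.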

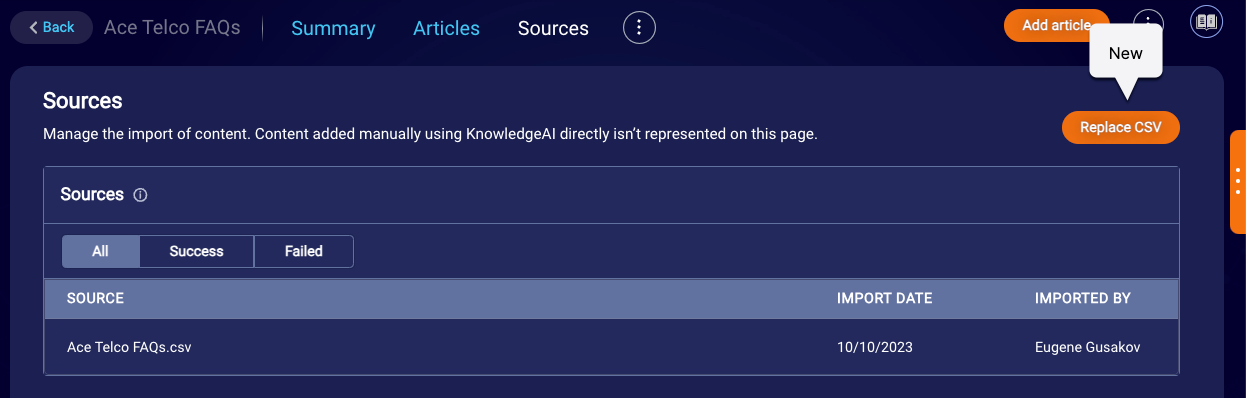

Internal knowledge bases - CSV and Google sheet imports are now asynchronous

To improve both performance and the user experience, we’ve made the import of a CSV file or Google sheet into an internal knowledge base an asynchronous process. As a result, these import processes now work very much like those for importing URLs or PDFs: Once the import begins, you’re taken to the Sources page, where you can view the status and results.

- Google sheets: Sync or replace the sheet on the Sources page, as before.

- CSV files: Replace the file on the Sources page. This feature is new in this release! It overwrites all of the articles with the contents of the new CSV file.

Internal knowledge bases - Links are preserved when importing URLs or PDFs

When importing URLs or PDFs, <a> tags (anchor tags) are now preserved during the process.

However, other HTML formatting isn't preserved, so you'll need to manually reapply it after verifying the import was successful. Keep in mind that KnowledgeAI supports only a subset of HTML.

Enhancements

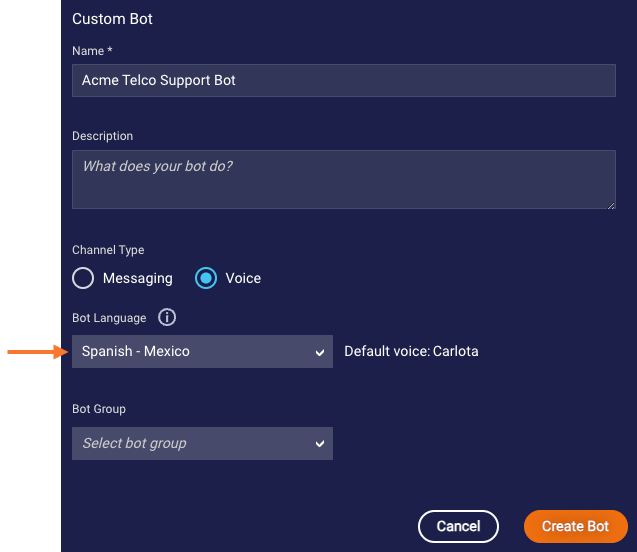

Voice bots - Reach your Spanish-speaking consumers

¡Ya está aquí! Spanish-language Voice bots are now supported in three locales:

- Spanish - Mexico

- Spanish - Spain

- Spanish - United States

When you create a Voice bot, you’ll find that more languages are available—Chinese and Arabic to name just two—but support for other languages is still experimental. Test thoroughly before using them in Production scenarios.

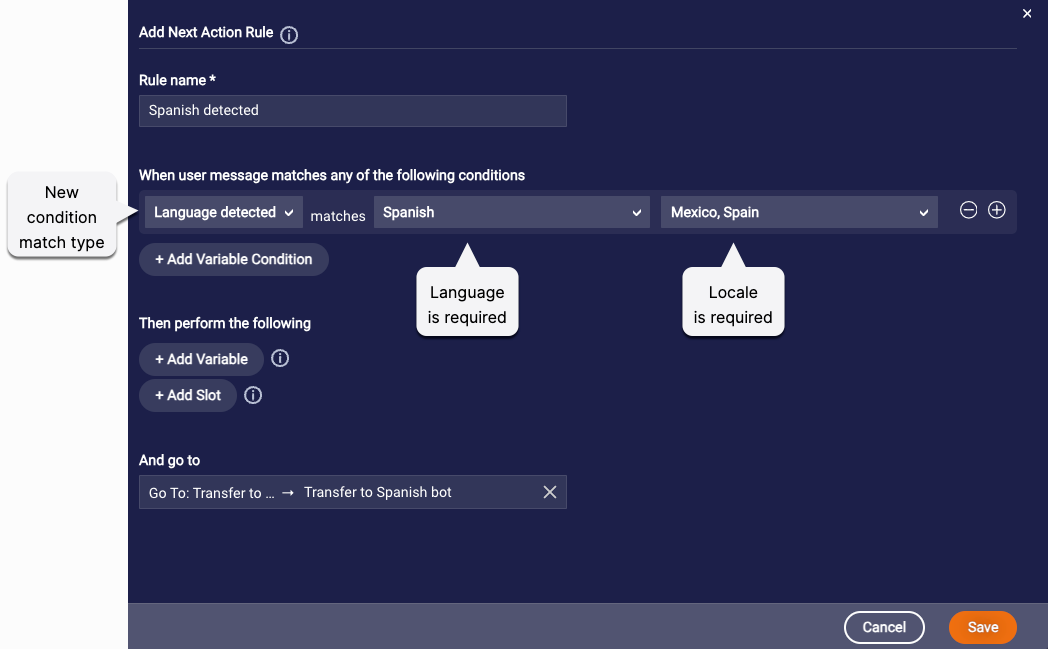

Voice bots - Drive the bot flow using the consumer’s language

At the start of a voice conversation, you often want to detect the consumer’s language and use that to drive the bot flow. Consider a conversation like this one:

Bot 1: How can I help you today?

Consumer: ¿Hablas español?

Bot 2: ¿Cómo puedo ayudarte?

Consumer: ¡Excelente! Estoy llamando porque quiero una velocidad de Internet más rápida.

In the flow above, English-language Bot 1 detects the language of the consumer’s first message and immediately transfers the conversation to Spanish-language Bot 2.

You can power this behavior using the new “Language detected” condition match type. This new condition match type triggers the specified Next Action when the consumer input matches the language and locale that you specify in the rule.

In our example conversation above, we directed the flow to a dialog in the English-language bot that performs a manual transfer to a Spanish-language bot using an Agent Transfer interaction.
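To make the rule logic concrete, here is a minimal Python sketch of how a “Language detected” rule could be evaluated. It is a conceptual model only: the class, the field names, the language and locale codes, and the dialog name are all hypothetical, and this isn't how Conversation Builder is implemented or configured.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class LanguageRule:
    language: str     # hypothetical language code, e.g. "es"
    locale: str       # hypothetical locale code, e.g. "es-MX"
    next_action: str  # hypothetical name of the dialog to go to


def pick_next_action(detected_language: str, detected_locale: str,
                     rules: List[LanguageRule]) -> Optional[str]:
    """Return the Next Action of the first rule whose language and locale both match."""
    for rule in rules:
        if rule.language == detected_language and rule.locale == detected_locale:
            return rule.next_action
    return None  # no rule matched, so the flow continues as usual


rules = [LanguageRule("es", "es-MX", "Transfer to Spanish bot")]
print(pick_next_action("es", "es-MX", rules))  # Transfer to Spanish bot
print(pick_next_action("en", "en-US", rules))  # None
```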

One final, important point: the Agent Transfer interaction is now available in Voice bots too. You can use it to perform a manual transfer to another Voice bot via the skill ID. (Automatic transfers to other Voice bots can be performed via a bot group. And transfers to human agents are performed via the Transfer Call interaction.)

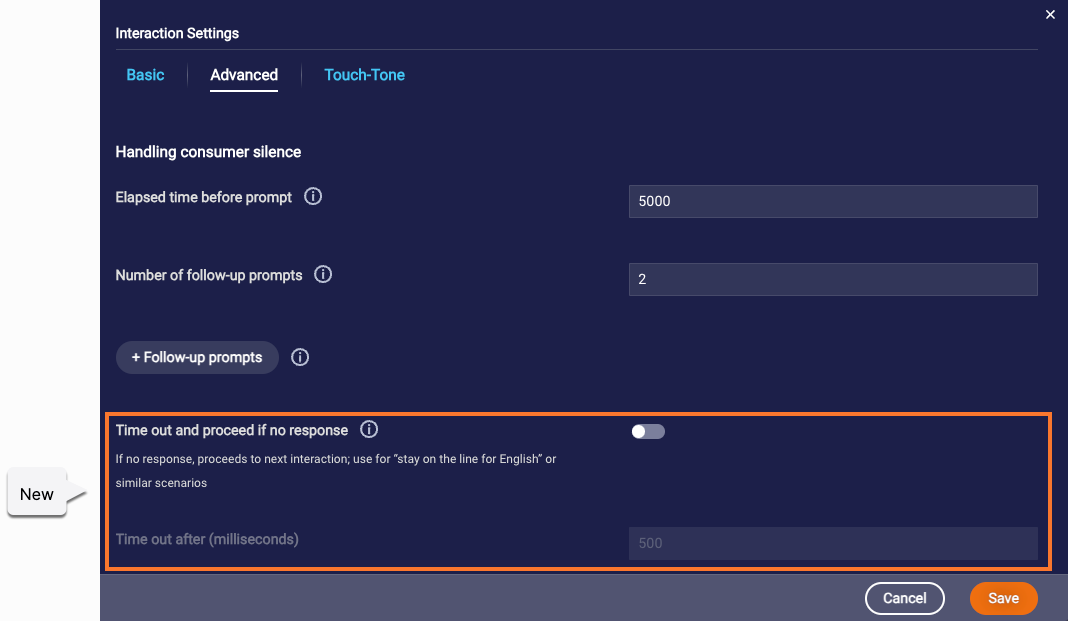

Voice bots - New options for handling consumer silence

In automated voice conversations, you always want to make things easy for the consumer. Since doing nothing is easiest of all, you might want to treat the consumer’s silence as a cue for where to direct the bot flow. For example, the bot might say something like:

- For Spanish, press 2. For English, press 1 or simply stay on the line.

- For Sales, press 2. For Service, press 1 or simply stay on the line.

These kinds of “stay on the line” scenarios are now possible thanks to a few new advanced settings in Speech and Audio questions:

If you want the bot to move ahead to the next interaction in the dialog when there’s no response from the consumer, turn on the new Time out and proceed if no response toggle in the question. Then specify the amount of time to wait (the timeout) before proceeding to the next interaction.

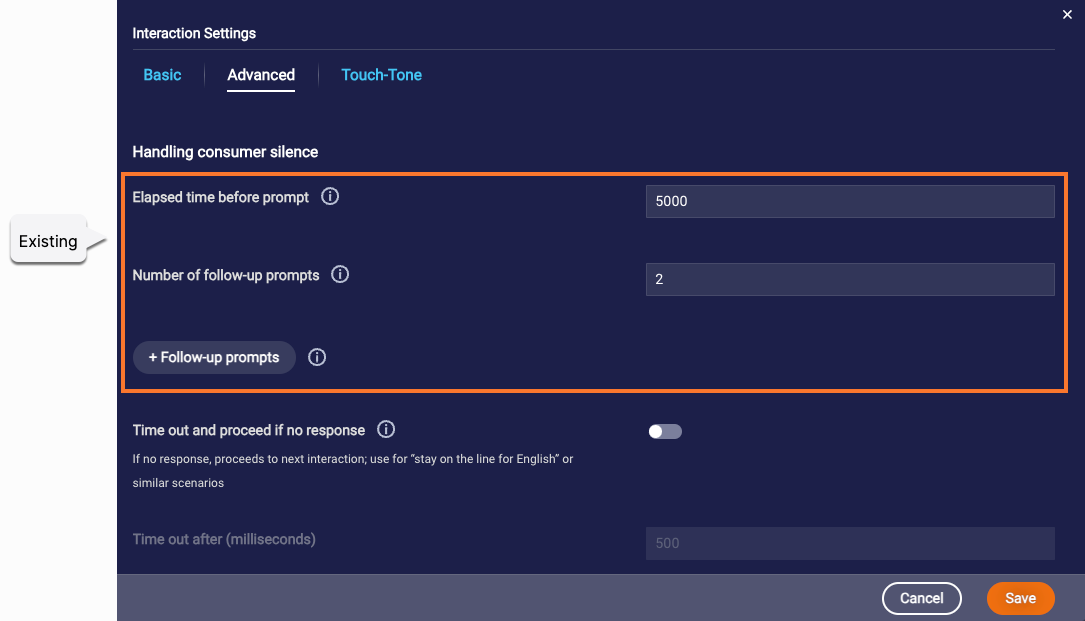

Keep in mind that there’s another way to handle consumer silence: You can prompt the consumer to respond to the question. There are settings for this too, but they aren’t new.

So, you can proceed to the next interaction after a certain amount of silence, or you can prompt the consumer to respond. You must choose one approach or the other.

This constraint is enforced by the UI. If you turn on the new toggle for proceeding to the next interaction after some silence, the existing prompt-related settings are disabled and not used. Likewise, if you use the prompt-related settings, the new toggle is disabled.
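The either/or choice described above can be modeled as a single mode rather than two independent toggles. The following Python sketch is a conceptual illustration only; the names, the default timeout value, and the returned strings are hypothetical, and the prompt branch is simplified because the timing of the existing prompt settings isn't covered in these notes.

```python
from dataclasses import dataclass
from enum import Enum


class SilenceMode(Enum):
    PROCEED_AFTER_TIMEOUT = "proceed"  # the new "stay on the line" behavior
    PROMPT_CONSUMER = "prompt"         # the existing prompt-based behavior


@dataclass(frozen=True)
class SilenceHandling:
    mode: SilenceMode
    timeout_seconds: float = 5.0  # hypothetical default; only used when proceeding


def next_step(handling: SilenceHandling, silent_for_seconds: float) -> str:
    """Decide what the bot does after the consumer has been silent for a while."""
    if handling.mode is SilenceMode.PROCEED_AFTER_TIMEOUT:
        if silent_for_seconds >= handling.timeout_seconds:
            return "go to next interaction"
        return "keep waiting"
    # Simplification: the prompt settings have their own timing, not modeled here.
    return "prompt consumer to respond"


stay_on_line = SilenceHandling(SilenceMode.PROCEED_AFTER_TIMEOUT, timeout_seconds=4.0)
print(next_step(stay_on_line, 5.0))  # go to next interaction
```

Using one mode field means the two approaches can never both be active, which mirrors what the UI enforces.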

Features

Generative AI solutions - Select and manage prompts via the Prompt Library

Every successful Generative AI solution relies on the power of prompts to bridge the gap between human intention and machine creativity. Prompts guide the behavior of Large Language Models (LLMs) and serve as the language through which humans interact with the AI system, instructing it to generate responses.

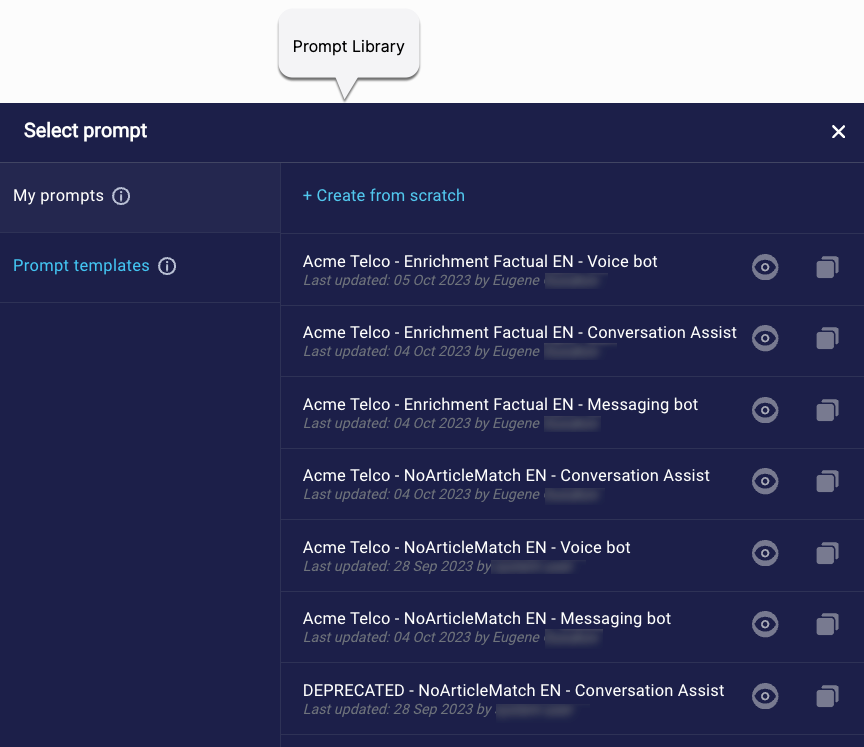

In this release, we are excited to introduce Conversational Cloud’s new Prompt Library. Use the Prompt Library to select, create, and edit the prompts in your conversational AI solution. Currently, the library exposes only prompts for use in Conversation Assist and/or Conversation Builder bots.

With the arrival of the Prompt Library, you’re in control: change which prompts are used, and edit the prompt instructions, whenever you need to.

Accessing the Prompt Library

You open the Prompt Library at the point of usage. Just click the prompt.

- KnowledgeAI: Click the prompt in the testing tool.

- Conversation Assist: Click the prompt in the Settings page.

- Conversation Builder: Click the prompt in the KnowledgeAI interaction or the Integration interaction.

As an example, here’s how to open it from the Answer Tester within a knowledge base in KnowledgeAI:

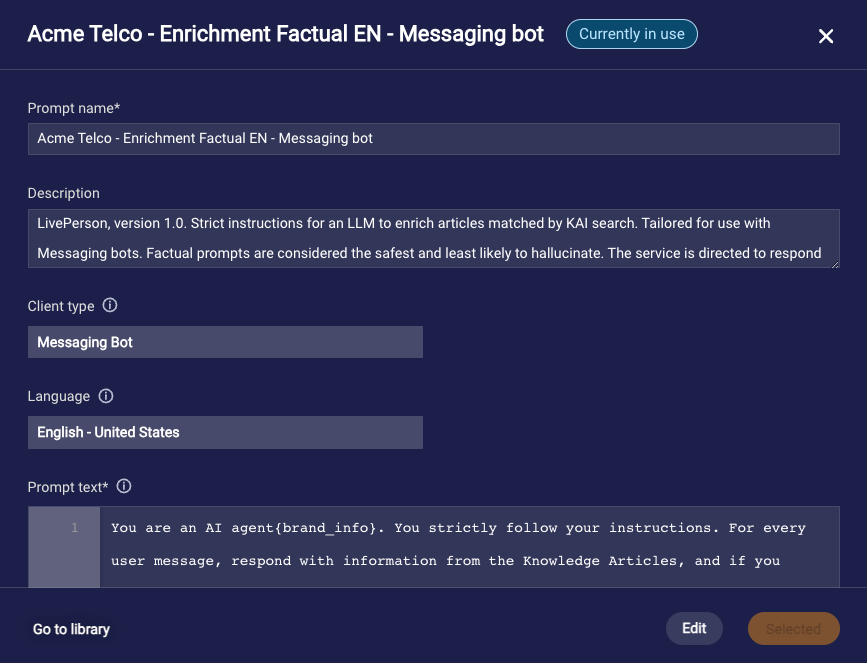

Prompt templates

LivePerson makes available prompt templates that you can copy and use. Templates are available for Conversation Assist and for Conversation Builder messaging and voice bots.

There are templates that you can copy and use as:

- Enrichment prompts

- No Article Match prompts

An Enrichment prompt instructs the LLM service on how to generate an answer using the knowledge articles in KnowledgeAI that matched the consumer’s query.

In contrast, a No Article Match prompt instructs the LLM service on how to generate a response when no knowledge articles in KnowledgeAI matched the consumer’s query. Using this prompt can offer a more fluent and flexible response that helps the user refine their query, and it means small talk is supported. However, the prompt can yield answers that are out-of-bounds. The model might hallucinate and provide a non-factual response in its effort to generate an answer using only the memory of the data it was trained on.

Default prompts versus custom prompts

The Prompt Library is designed to accelerate and simplify prompt usage, so you can unlock the full potential of Generative AI more easily.

Just getting started? All Conversation Assist and Conversation Builder integration points use default prompts at first. Use them for a short time after you’ve enabled our Generative AI features, that is, while you’re exploring.

Ready to start building your own solution? This is definitely the time to switch to one of your own custom prompts, so your solution isn’t impacted by changes we make to the default prompts. You can create prompts in three ways:

- By copying a LivePerson template

- By copying one of your own prompts

- From scratch

Language support

Prompts in English and Spanish are officially supported. We've tested both with good results.

Feel free to experiment with other languages, but test thoroughly to confirm that the responses are high quality.

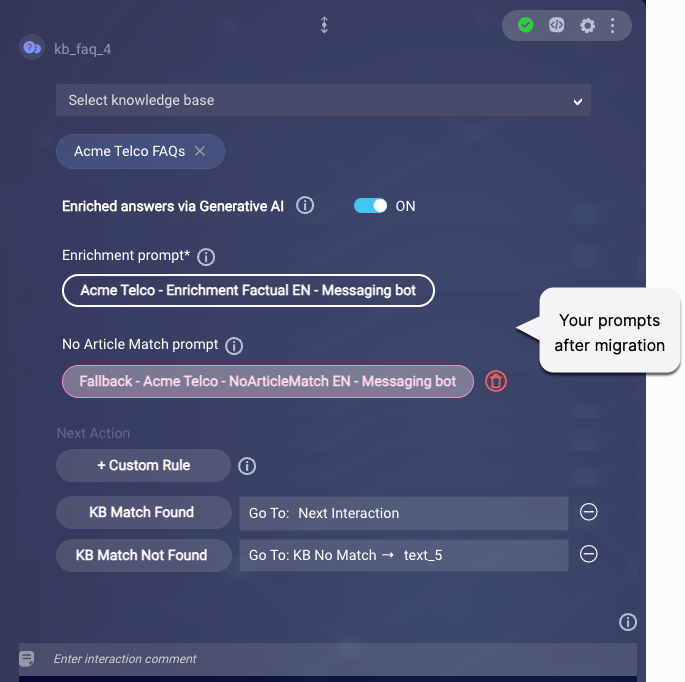

Migration of accounts to the Prompt Library

Shortly after deployment of the release, LivePerson will manually migrate accounts to use the new Prompt Library if those accounts meet the following criteria:

- The account uses our Generative AI features in Conversation Assist and/or Conversation Builder bots.

- The account uses a custom prompt.

- The account is active. By “active” we mean that the account has had consumer traffic in the last 30 days.

After the migration of your account:

- Your custom prompts can be found in My Prompts in the Prompt Library.

- If you check your Conversation Assist and/or Conversation Builder integration points, you’ll see that your custom prompts are now visible and in place.

You can expect no change in runtime behavior. The behavior in place before the migration will be the same afterward.

If your account doesn’t meet the criteria above for some reason (Generative AI preview has expired, no consumer traffic in the last 30 days, etc.), you’ll still be able to find your custom prompts in My Prompts in the Prompt Library. But the applicable integration point in Conversation Assist and/or Conversation Builder will be automatically set to use a default prompt.
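The migration criteria and their outcome can be summarized in a short Python sketch. It is a reading aid under stated assumptions, not LivePerson's migration logic: the `Account` fields and function names are hypothetical, and counting exactly 30 days as "in the last 30 days" is an assumption.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

ACTIVE_WINDOW = timedelta(days=30)  # "active" window stated in these notes


@dataclass(frozen=True)
class Account:
    uses_generative_ai: bool                # Generative AI features in use (preview not expired)
    has_custom_prompt: bool
    last_consumer_traffic: Optional[date]   # None if there has been no traffic


def is_active(account: Account, today: date) -> bool:
    """Assumption: traffic exactly 30 days ago still counts as active."""
    if account.last_consumer_traffic is None:
        return False
    return today - account.last_consumer_traffic <= ACTIVE_WINDOW


def prompt_after_migration(account: Account, today: date) -> str:
    """Return which kind of prompt the integration point uses after migration."""
    eligible = (account.uses_generative_ai
                and account.has_custom_prompt
                and is_active(account, today))
    return "custom" if eligible else "default"


today = date(2023, 10, 11)
print(prompt_after_migration(Account(True, True, date(2023, 10, 1)), today))  # custom
print(prompt_after_migration(Account(True, True, date(2023, 7, 1)), today))   # default
```

In both cases the custom prompts themselves remain available in My Prompts; only the prompt selected at the integration point differs.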

Features

Medallia - Target Interactive Conversations

Enhancements

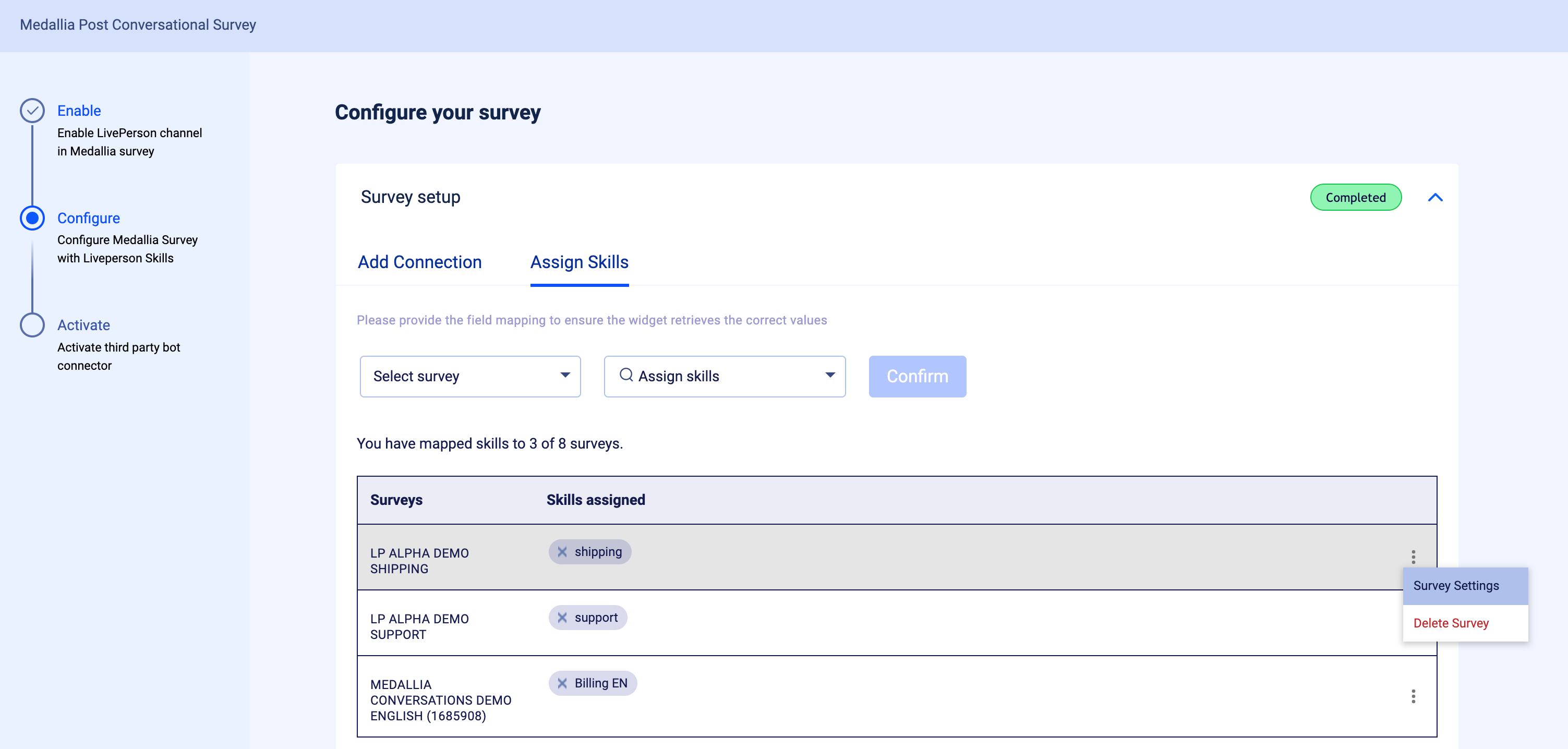

Sending surveys to consumers who aren’t interacting with your brand can negatively impact your CSAT score without cause. Previously, you could enable a setting at the account level to send Medallia surveys only to engaged consumers. You can now also configure this at the skill level to target different consumer journeys across the skills within your organization. Specify the minimum number of messages that must be sent by the bot or human agent and by the consumer for the survey to be triggered. This is available in the Medallia Survey setup experience via iHub.

Please note: if both the skill-level and account-level settings are enabled, the skill-level setting is honored.
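The precedence rule and the message thresholds can be expressed in a few lines of Python. This is a sketch of the behavior described above, not the Medallia or iHub configuration format; the class, field names, skill names, and threshold values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class EngagementThreshold:
    min_agent_messages: int     # messages sent by the bot or human agent
    min_consumer_messages: int  # messages sent by the consumer


def effective_threshold(skill: str,
                        skill_settings: Dict[str, EngagementThreshold],
                        account_setting: Optional[EngagementThreshold]) -> Optional[EngagementThreshold]:
    """The skill-level setting wins; otherwise fall back to the account-level one."""
    return skill_settings.get(skill, account_setting)


def should_send_survey(agent_messages: int, consumer_messages: int,
                       threshold: Optional[EngagementThreshold]) -> bool:
    """Trigger the survey only when both minimums are met (or no threshold is set)."""
    if threshold is None:
        return True
    return (agent_messages >= threshold.min_agent_messages
            and consumer_messages >= threshold.min_consumer_messages)


account = EngagementThreshold(min_agent_messages=1, min_consumer_messages=1)
per_skill = {"Sales": EngagementThreshold(min_agent_messages=2, min_consumer_messages=3)}

sales = effective_threshold("Sales", per_skill, account)
service = effective_threshold("Service", per_skill, account)
print(should_send_survey(2, 1, sales))    # False
print(should_send_survey(2, 1, service))  # True
```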