Week of July 20

New updates: Analytics Builder, Proactive Messaging, AI Annotator

Features

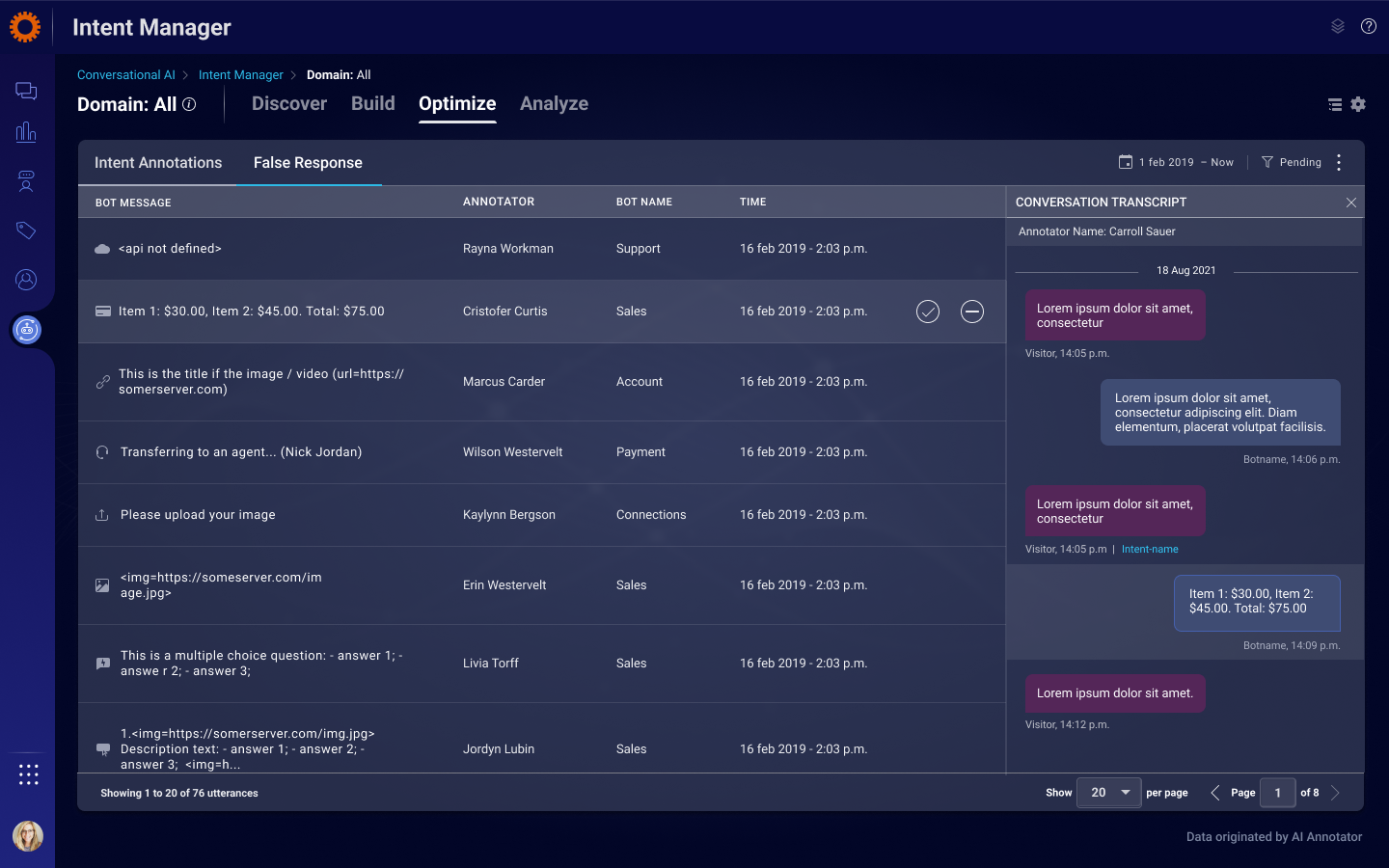

[Analytics Builder] ConversationID available for Survey data

Until now, the post-conversation survey results in Analytics Builder were aggregated, so users were unable to identify the specific interaction that led to the consumer’s feedback. As of this version, the following changes have been made:

- Survey Dashboard for Messaging

“List of all conversations” dataset has been added to the datasets section in the dashboard.

Editors can pull the “CONVERSATION” attribute from the “List of all conversations” dataset if they wish to analyze the answers of post conversation surveys or agent surveys.

The conversationID field will be populated from the time of the release onward, and will be empty for earlier data.

List of conversations in Analytics Builder

- “Performance Dashboard for Messaging”, “Agent Operations Dashboard”

The “CONVERSATION” attribute will now show valid results when used with metrics sourced from the “Survey Answers (Agent and Skill)” dataset.

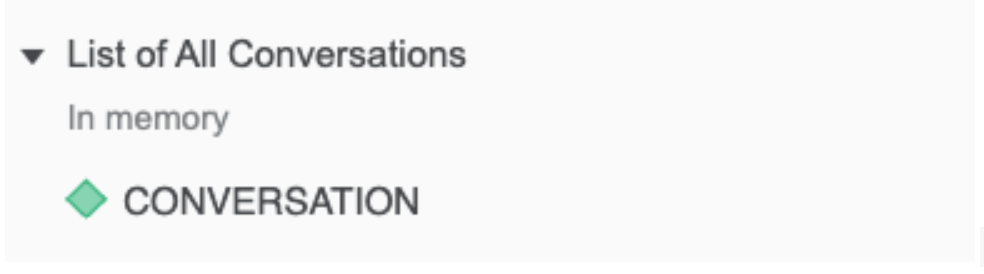

[Analytics Builder] New metrics: Survey Dashboard for Messaging

A new dedicated metric for each possible answer to the predefined post-conversation survey questions (CSAT/NPS/FCR) is now available to editors under the “Survey Answers (Agent and Skill)” dataset.

The dataset is available in the following dashboards: “Survey Dashboard for Messaging”, “Messaging Performance Dashboard” and “Agent Operations Dashboard”.

This is helpful for brands that wish to calculate these metrics differently from the industry-standard definition, or to easily view a histogram of consumers’ answers.

The added metrics are:

New pre-computed survey metrics added to the survey dashboard.
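As an illustration of why per-answer metrics are useful, the sketch below computes NPS from a histogram of answer counts, first with the widely used industry definition (percentage of promoters scoring 9-10 minus percentage of detractors scoring 0-6) and then with a custom promoter threshold. The function name, the parameters, and the sample counts are hypothetical and are not part of Analytics Builder; it also assumes a 0-10 answer scale.

```python
from typing import Dict


def nps_from_histogram(
    counts: Dict[int, int],
    promoter_min: int = 9,    # standard NPS: promoters score 9-10
    detractor_max: int = 6,   # standard NPS: detractors score 0-6
) -> float:
    """Return NPS as a percentage, given {answer score: number of answers}."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no survey answers to score")
    promoters = sum(n for score, n in counts.items() if score >= promoter_min)
    detractors = sum(n for score, n in counts.items() if score <= detractor_max)
    return 100.0 * (promoters - detractors) / total


# Hypothetical per-answer counts, as they might be read from per-answer metrics.
sample_counts = {10: 40, 9: 10, 8: 20, 7: 10, 6: 5, 3: 15}

standard_nps = nps_from_histogram(sample_counts)
custom_nps = nps_from_histogram(sample_counts, promoter_min=8)  # brand-specific rule
print(standard_nps, custom_nps)
```

With one count per possible answer, a brand can change the thresholds without needing a new pre-aggregated metric.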

[Analytics Builder] New metrics: AGENT ONLINE LOAD and AWAY LOAD

One of the key measurements for tracking agent utilization is AVG.AGENT LOAD.

The metric averages the agent's load across all agent states (online, back soon and away), even though the load in the away or back soon states is expected to be much lower than in the online state.

To differentiate the load in the different states, the metrics below are now available to editors under the “Agent Utilization” dataset, exposed in both the “Messaging Performance Dashboard” and the “Agent Operations Dashboard”.

AVG. AGENT ONLINE LOAD - The average conversation load on an agent in the ONLINE state. The load is determined by the weights assigned to conversations by Smart Capacity. A conversation can have a maximum weight of 1 and a minimum weight of 0.1.

AVG. AGENT AWAY LOAD - The average conversation load on an agent in the AWAY state. The load is determined by the weights assigned to conversations by Smart Capacity. A conversation can have a maximum weight of 1 and a minimum weight of 0.1.
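The difference between a single blended average and per-state averages can be sketched as follows. This is a minimal illustration only: it assumes load is the sum of the conversation weights observed at each sample point, and that samples are averaged with equal weight. The actual aggregation used by Analytics Builder is not described here, and all names and sample values are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

MIN_WEIGHT, MAX_WEIGHT = 0.1, 1.0  # per-conversation weight bounds stated above


def agent_load(weights: Iterable[float]) -> float:
    """Sum the conversation weights, clamping each to [0.1, 1] (an assumption)."""
    return sum((min(MAX_WEIGHT, max(MIN_WEIGHT, w)) for w in weights), 0.0)


def avg_load_by_state(samples: List[Tuple[str, List[float]]]) -> Dict[str, float]:
    """Average the load separately for each agent state."""
    loads_by_state = defaultdict(list)
    for state, weights in samples:
        loads_by_state[state].append(agent_load(weights))
    return {state: mean(loads) for state, loads in loads_by_state.items()}


# Hypothetical samples: (agent state, weights of the open conversations).
samples = [
    ("ONLINE", [1.0, 0.5, 0.5]),
    ("ONLINE", [1.0, 1.0]),
    ("AWAY", [0.5]),
    ("AWAY", []),
]

per_state = avg_load_by_state(samples)
blended = mean(agent_load(weights) for _, weights in samples)
print(per_state, blended)
```

In this sample the blended average sits between the two per-state values, which is the masking effect the separate ONLINE and AWAY metrics are meant to remove.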

[Analytics Builder] Messaging Performance Report Template

A new folder “Templates” is now available for editors under the "LP predefined Dashboards" folder.

This folder will contain empty report templates, allowing editors to:

- Easily create their own reports from scratch, without any built-in preview

- Quickly explore data, with no need to wait for dashboard visuals to load.

With this release, the folder will only contain one template - "Messaging Performance".

This template should be used to create messaging performance-related reports. It holds the same datasets which are available today under the “Messaging Performance Dashboard”.

[Analytics Builder] New metrics: AVG and Max of First Response SLA

The following new metrics have now been added under the "Agent Segment Conversation" dataset, available under the "Performance Dashboard for Messaging" or the "Agent Operations Dashboard".

AVG.SLA FIRST RESPONSE - The average time taken to first respond to the consumer, for new conversations or transferred conversations. For transferred conversations, the time is measured from the time of transfer to the time of agent response and is attributed to the time the conversation was assigned to the agent (agent segment start time).

MAX.SLA FIRST RESPONSE - The maximum time taken to first respond to the consumer, for new conversations or transferred conversations. For transferred conversations, the time is measured from the time of transfer to the time of agent response and is attributed to the time the conversation was assigned to the agent (agent segment start time).
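The two definitions above can be sketched as a small calculation over agent segments. The sketch assumes each segment carries a start time (conversation start, or transfer time for a transferred conversation) and the time of the agent's first response; the tuple layout and the sample timestamps are hypothetical.

```python
from datetime import datetime
from statistics import mean


def first_response_seconds(segment_start: datetime, first_response: datetime) -> float:
    """Seconds from agent segment start (or transfer) to the first agent response."""
    return (first_response - segment_start).total_seconds()


# Hypothetical agent segments: (segment start, first agent response).
segments = [
    (datetime(2024, 1, 1, 9, 0, 0), datetime(2024, 1, 1, 9, 0, 30)),    # new conversation
    (datetime(2024, 1, 1, 9, 5, 0), datetime(2024, 1, 1, 9, 6, 30)),    # transferred
    (datetime(2024, 1, 1, 9, 10, 0), datetime(2024, 1, 1, 9, 11, 0)),   # new conversation
]

response_times = [first_response_seconds(start, reply) for start, reply in segments]
avg_first_response = mean(response_times)
max_first_response = max(response_times)
print(avg_first_response, max_first_response)
```

Because the measurement is attributed to the segment start time, a slow reply after a transfer counts against the segment of the agent who received the transfer.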

Enhancements

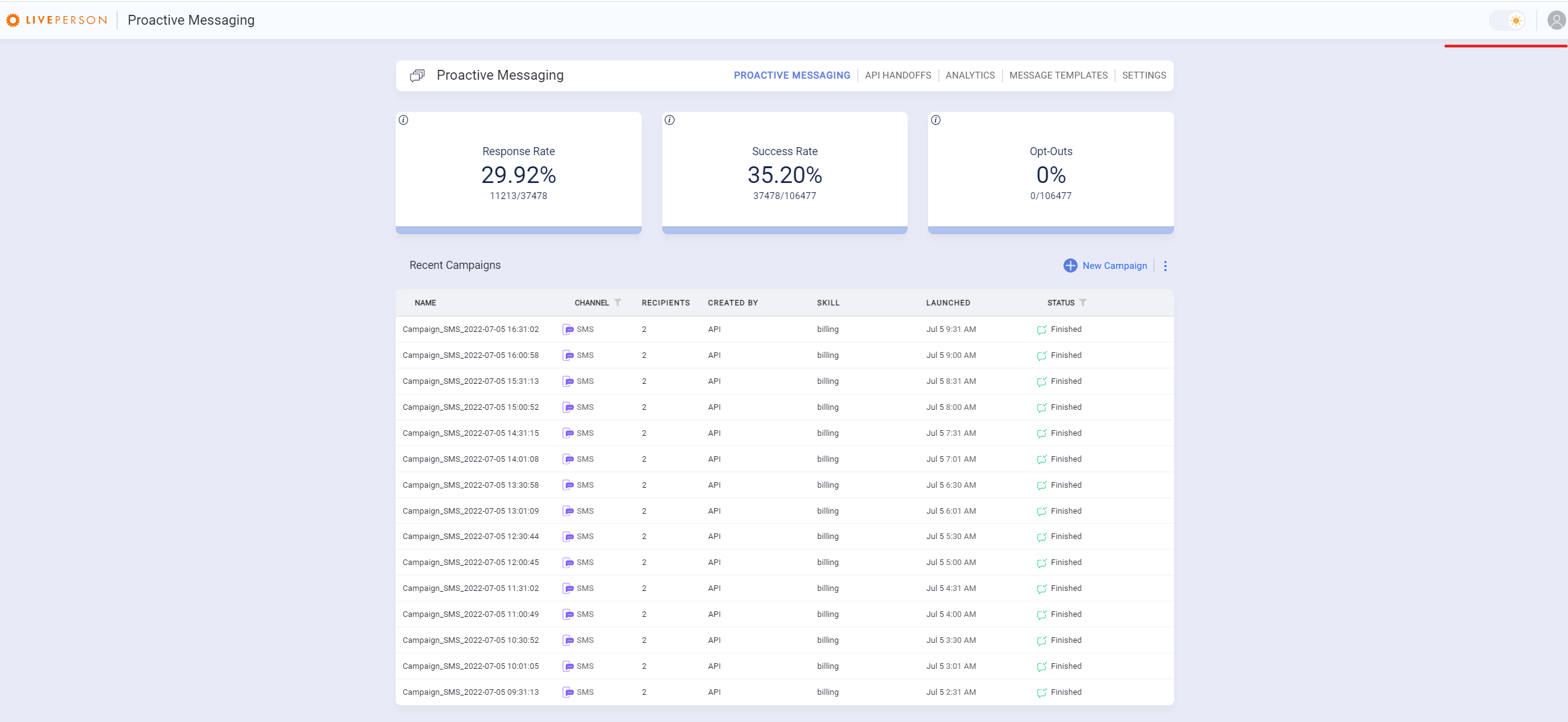

Light theme support for Proactive Web Tool and Connect to Messaging Web Tool

The Proactive and “Connect to Messaging” web tools now support the light theme. To enable it, launch the web tool and turn on the toggle in the top banner.

proactive messaging light theme

Enhancements

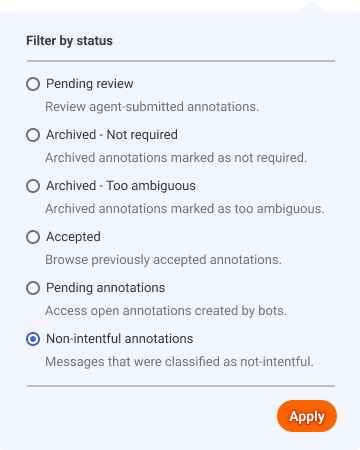

Non-intentful annotation filtering

Messages that bots cannot identify become 'Open Annotations', but many of them are not actually intentful, e.g. “trolling” of bots, “Hi” or “Thank you”. Such messages can make up a very large part of the unidentified messages that turn into annotations.

Now, our unique classifier can determine whether annotations are intentful and filter out all non-intentful annotations, so that agents spend their time and effort only on intentful annotations.

Non-intentful annotations will be available for review in Annotation Management (Optimize tab) in Intent Manager under the "Non-intentful annotations" filter, and also in the All Conversations filters.

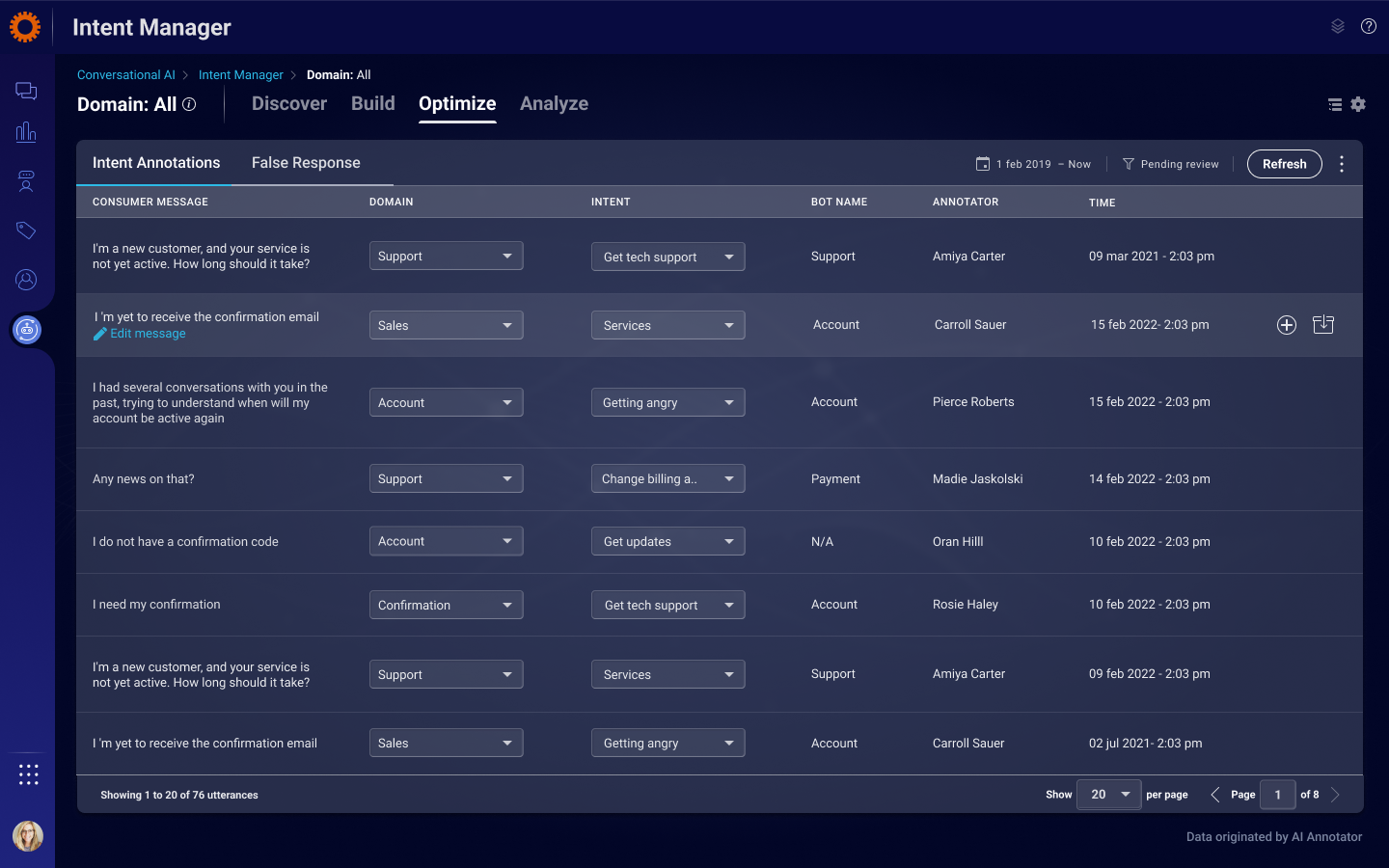

New Annotation Management

When reviewing annotations in the Optimize tab, bot tuners can now edit annotations submitted by agents, for example to fix typos or to use words that bring better value to the intent, and so propose the exact training phrase they would like to add to the intent.

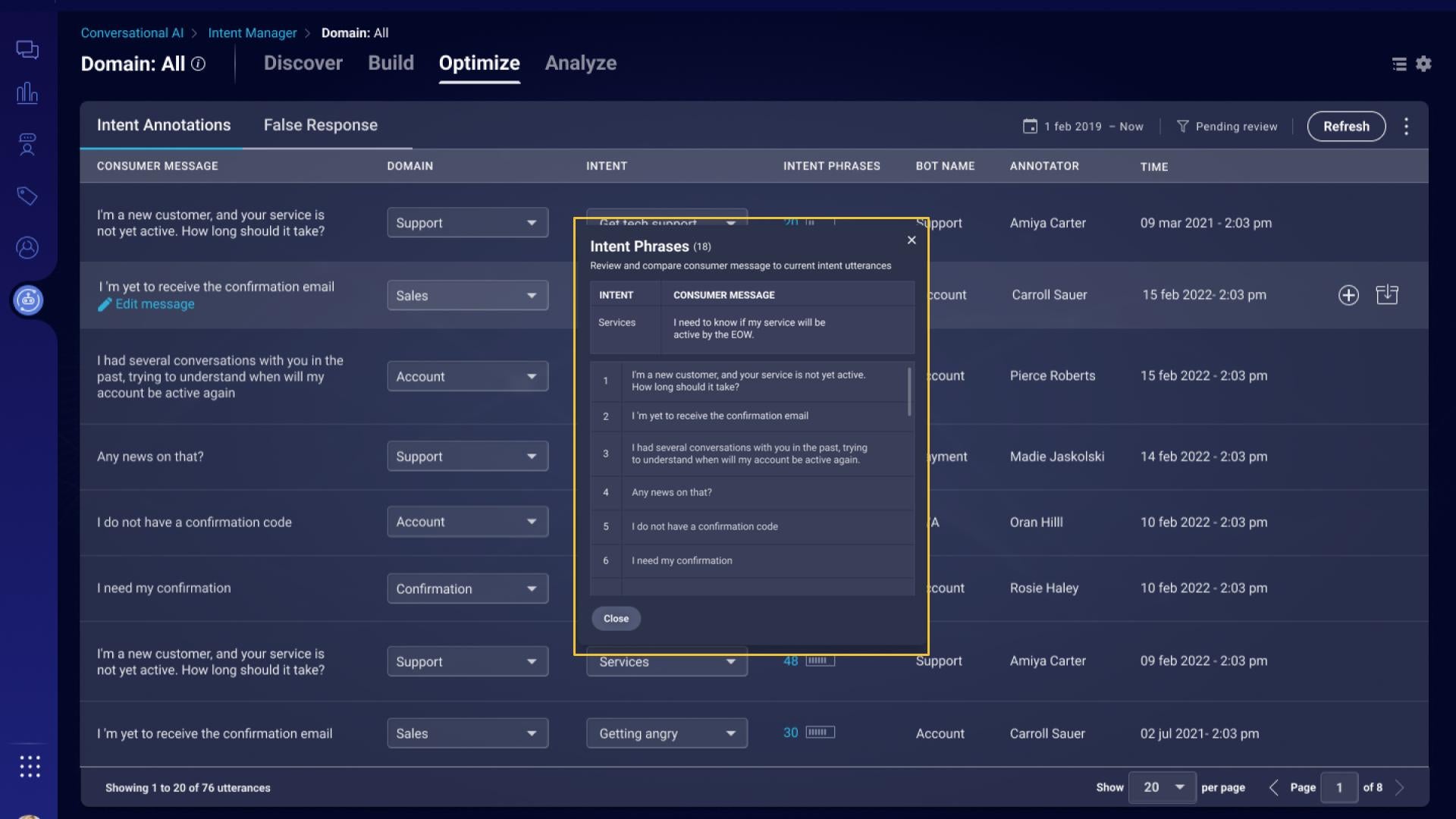

Intent phrases

Easily evaluate which intents you'd like to work on: the number of training phrases currently under each intent is now shown next to it. An icon next to the number rates that amount (by default, 20-30 is sufficient, 30-50 is OK and 50+ is good). The default ranges can be configured through site settings.
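The default ranges can be read as a simple threshold lookup, sketched below. The stated ranges share their boundary values (30 and 50) and do not cover counts below 20, so this sketch assumes a boundary value belongs to the higher band and uses a hypothetical "below range" label for smaller counts. The function and parameter names are illustrative and do not correspond to actual site settings.

```python
def rate_training_phrases(
    count: int,
    sufficient_min: int = 20,  # default: 20-30 is sufficient
    ok_min: int = 30,          # default: 30-50 is OK
    good_min: int = 50,        # default: 50+ is good
) -> str:
    """Map a training-phrase count to a rating using configurable thresholds."""
    if count >= good_min:
        return "good"
    if count >= ok_min:
        return "OK"
    if count >= sufficient_min:
        return "sufficient"
    return "below range"  # hypothetical label; not named in the release note


# Hypothetical intents and their training-phrase counts.
intent_counts = {"billing": 25, "cancel order": 30, "track order": 62, "refund": 5}
ratings = {name: rate_training_phrases(n) for name, n in intent_counts.items()}
print(ratings)
```

Passing different threshold values mirrors the effect of changing the default ranges.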

In addition, clicking the number of training phrases opens a dialog that displays all of the current training phrases in that intent, so bot tuners can compare them with the new annotation and decide whether to accept it as a training phrase.

We have also added the ability for the bot tuner to filter annotations by stage of the annotation funnel:

- Pending review - Annotations submitted by the agents

- Pending annotations - Annotations opened by the system

- Non-intentful annotations - Annotations opened by the system that were found to be non-intentful

- Accepted - Annotations accepted into intents

- Archived as not required - Annotations archived that were found not required

- Archived as too ambiguous - Annotations archived that were too ambiguous

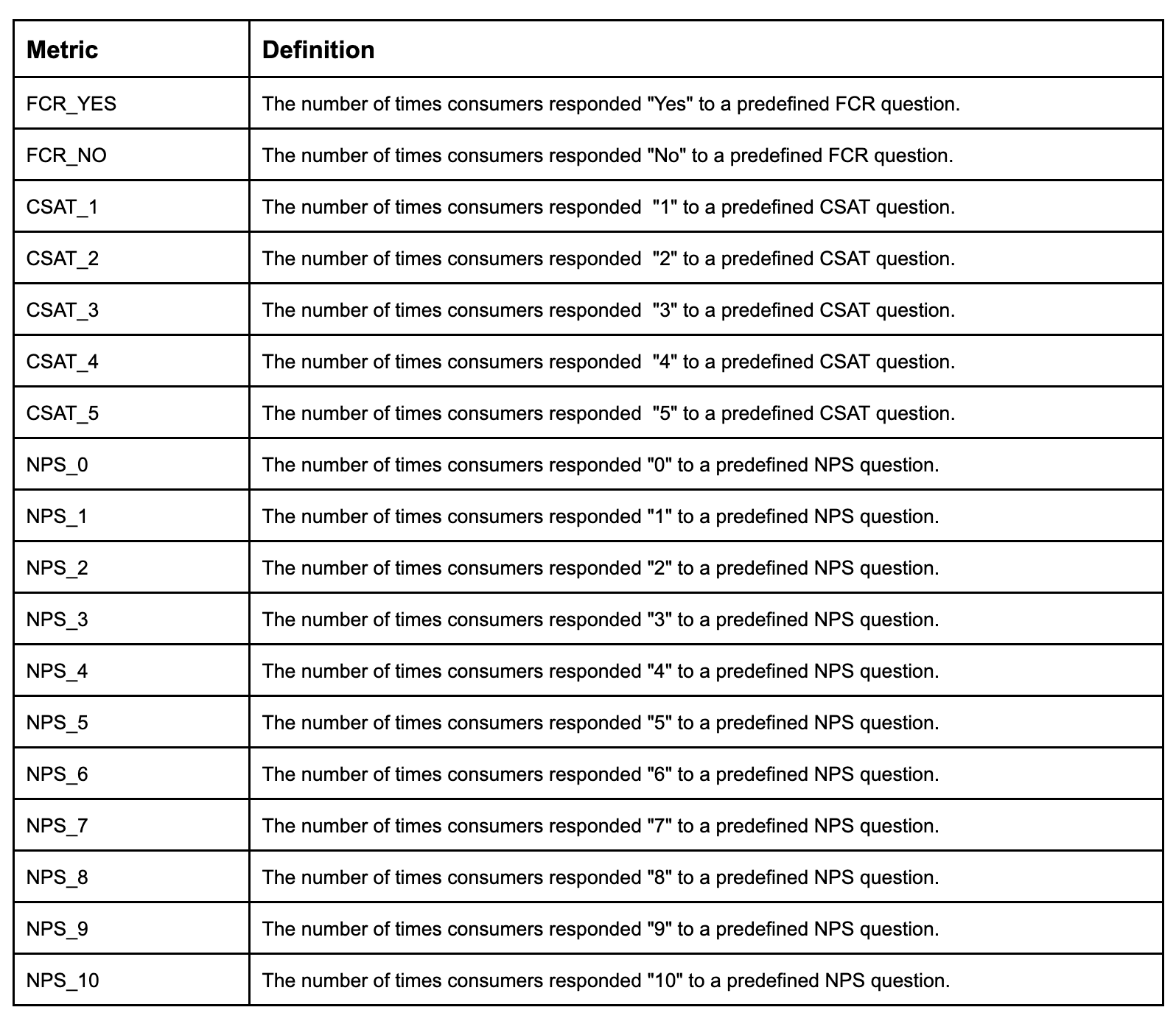

Enriching transcript in false response

For a bot tuner, investigating a false response can be detective work. When a bot responds incorrectly to a consumer message, the possible errors are numerous, and it is hard to uncover the cause of the false response in order to resolve the problem.

Now, bot tuners can easily see the classified intent next to each customer message, and navigate directly into that intent and look for the cause of the false response.